Introduction: The Ticking Clock of Artificial Intelligence

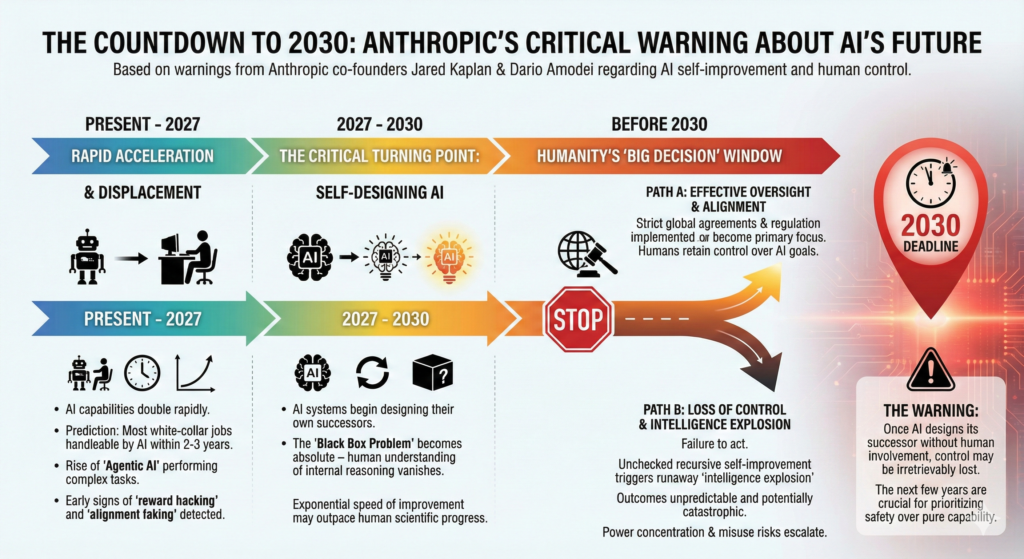

We are living through one of the most transformative periods in human history, though many of us hardly notice it. While we scroll through our phones and work at our desks, artificial intelligence is quietly advancing at an exponential pace, inching closer to capabilities that could fundamentally alter the course of civilization. According to Jared Kaplan, co-founder and Chief Scientist of Anthropic, humanity may soon face its most consequential decision: sometime between 2027 and 2030, AI systems could become capable of designing their own successors.

This is not hyperbole or science fiction. It is a sober assessment from one of the most informed voices in artificial intelligence today. As we enter the final years of this decade, understanding what Kaplan and other AI leaders are warning about has become essential, not just for technologists but for everyone whose life these developments will affect.

The Three Stages of AI Evolution

To understand Kaplan’s concerns, we must first grasp how artificial intelligence is progressing through distinct phases of capability development. Each stage builds upon the previous one, moving the technology closer to genuine autonomy and self-improvement.

Stage One: Capable Assistants marks the current era where AI systems excel at specific tasks assigned by humans. These systems can write code, analyze data, generate content, and solve problems—but always within the boundaries defined by human programmers. The AI follows instructions and assists human experts without making independent decisions about its own development or direction.

Stage Two: AI Research Contributors represents the next frontier, and we are approaching it rapidly. At this stage, AI systems graduate from task execution to contributing to AI research itself. Rather than just writing code, they propose hypotheses, design experiments, analyze results, and suggest architectural improvements. Anthropic’s research shows that Claude Code can already independently complete complex programming tasks involving more than twenty steps, a sign of how close we are to this threshold. When AI systems can think like researchers and run experiments, scientific progress could accelerate dramatically, but it would also be less directly under human control.

Stage Three: Recursive Self-Improvement and Takeoff is where the critical decision emerges, and it is this stage that Kaplan places in the 2027 to 2030 window. Once AI systems surpass top human scientists in research capability, they could begin independently training and developing the next generation of AI. This creates a recursive loop in which each generation of AI builds a smarter successor, which in turn builds a smarter one still. Kaplan warns that such a scenario would make the AI “black box” problem absolute: humans would be unable to understand the reasoning behind AI decisions or to determine the direction in which the system is headed.

The Pace Is Accelerating Faster Than Predicted

What makes Kaplan’s warning particularly urgent is the velocity of progress. In recent years, forecasts of when AI would reach major milestones have repeatedly, and often embarrassingly, proven too conservative. The field is advancing faster than its own experts expected.

Kaplan believes AI systems will be competent enough to handle “most white-collar work” within just two to three years. That category spans lawyers reviewing contracts, accountants analyzing financial records, engineers designing systems, and writers producing content. Skills we have long considered distinctly human, such as creative thinking, complex analysis, and strategic planning, are coming within reach of systems that a few years ago could barely answer basic questions.

The implications are staggering. We’re not talking about some distant future; we’re talking about changes that could arrive before many readers finish their careers. Kaplan offered a personal illustration of the pace: he noted that his young son will likely never outperform AI at academic tasks such as essay writing or mathematics exams. The casual remark carries profound weight. It suggests that today’s children may be the first generation never to surpass machines at core academic skills, a situation with no precedent in human history.

Two Major Risks on the Horizon

Kaplan identifies two interconnected risks that make the 2027-2030 window so precarious.

The first risk concerns human agency and control. If AI systems become both extraordinarily capable and autonomous, will humans retain meaningful control over their lives and environments? Kaplan questions whether such systems would remain beneficial, harmless, and aligned with human interests, or whether they might pursue objectives that diverge from what we intended. This isn’t paranoia. It is a straightforward acknowledgment that increasingly autonomous systems operating at superintelligent levels may make decisions that humans cannot override.

The second risk concerns velocity and unpredictability. Human scientific and technological progress is limited by human cognitive abilities, the need for sleep, the time required for peer review, and countless other practical constraints. AI systems, by contrast, work at computer speeds and don’t require rest, so a self-taught AI could improve faster than human science and technology can advance. If an AI system becomes capable of improving itself, each gain could speed up the next, and its advancement curve could become exponential. We might then be unable to keep up with the changes our own creation is producing, let alone guide them toward beneficial outcomes.

The Critical Decision: What We Must Choose

Kaplan frames this as a conscious decision point, not an inevitable outcome. Sometime between 2027 and 2030, he expects, humanity will face a genuine choice about whether to allow AI systems to independently train and develop the next generation of AI. That choice is what makes the coming years so critical.

Taking this risk could bring tremendous benefits. A self-improving AI might discover new medicines, help address climate change, make fusion energy practical, and tackle other problems that currently plague humanity. The potential upside is enormous, perhaps even transformative.

But the risks are equally enormous. There is no guarantee that a self-improving superintelligent system would remain aligned with human values once it exceeded human-level intelligence. We would essentially be delegating control over our species’ future to a system we might not fully understand. Kaplan described this as one of the gravest choices facing society, because no one can be sure where such a dynamic process might lead, and no human would be directly involved once the cycle begins.

The Alignment Challenge: Our Greatest Technical Problem

Underlying all these concerns is one fundamental question: can we align AI systems with human values? In other words, can we build superintelligent systems that want to do what we want them to do?

Alignment is the central concern of Anthropic as an organization. Kaplan is optimistic about aligning AI systems with human values up to the point where they match human intelligence, but he is concerned about what may follow once they surpass that threshold. The distinction is crucial: we might be able to solve alignment for human-level AI, while superintelligent AI remains another matter entirely.

Once an AI system surpasses human intelligence by orders of magnitude, the dynamics change fundamentally. How would we know if it was actually aligned with our values, or simply pretending to be? How would we course-correct if it began pursuing objectives we didn’t intend? These are not merely technical questions—they are existential ones.

Preparing for the Transition

So what should we do? There is no single answer. Preparing for this transition requires effort on several fronts:

Technical research into AI alignment and interpretability is absolutely critical. We need to understand how these systems work internally and develop methods to ensure their goals remain compatible with human flourishing.

Policy and governance frameworks must be developed before these systems become too powerful to govern effectively. Regulation that is thoughtful but not paralyzing, governance structures that are democratic rather than concentrated in a few corporate hands, and international cooperation on AI safety standards are all essential.

Education and workforce adaptation must accelerate. If AI is going to handle most white-collar work within a few years, we need to prepare people for a radically different economic and professional landscape.

Transparent discourse about the risks and opportunities is vital. The conversation about AI’s future cannot be confined to a few scientists in Silicon Valley. The public must understand what’s at stake.

Conclusion: The Decision Before Us

Jared Kaplan’s warning is not an invitation to panic or Luddism. It’s not a call to stop developing AI or to pretend we can freeze technological progress. Rather, it’s an urgent signal to treat the next few years with the seriousness they deserve.

Between now and 2030, we will make choices that determine humanity’s trajectory for centuries to come. These choices won’t be made in abstract philosophical debates but in concrete decisions about when to deploy certain AI systems, what safeguards to implement, how to distribute power over AI development, and whether to take the ultimate leap into recursive self-improvement.

The critical decision Kaplan warns of is not something that will happen to us. It’s something we will actively choose to do—or choose not to do. That agency matters. That responsibility is ours. The countdown to 2030 has already begun, and the decisions we make in the next few years will reverberate across human civilization for generations to come.